Clinical Research

(CR-001) Pressure Injury Risk Prediction with Machine Learning and Explainable Artificial Intelligence

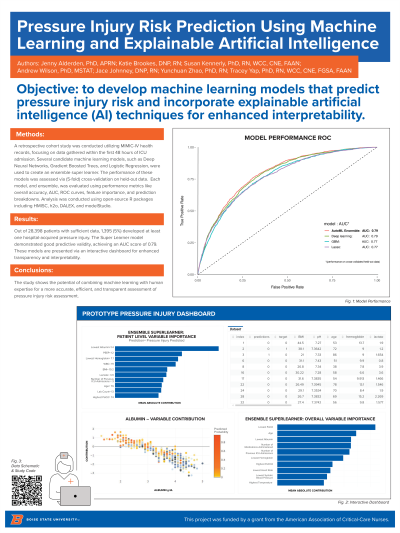

Combining data-driven machine learning (ML) with human feedback holds considerable promise for precise, efficient, and reliable pressure injury risk assessment. Realizing that promise requires models that are accurate, transparent, and interpretable. This study aims to develop ML models for predicting pressure injury risk and to apply explainable artificial intelligence (XAI) techniques that improve both global (whole-model) and local (individual-prediction) interpretability.

Methods:

This retrospective cohort study analyzed health record data from the Medical Information Mart for Intensive Care (MIMIC-IV), a longitudinal database of de-identified records of adult ICU patients at Beth Israel Deaconess Medical Center between 2008 and 2019.¹ Predictor variables were selected according to the conceptual framework of Coleman and colleagues² and limited to events within the first 48 hours of admission. Data were considered sufficient if the patient had at least one vital sign measurement in the first 48 hours. The hospital-acquired pressure injury outcome included stages 1-4, unstageable, and deep tissue injuries. All hospital-acquired pressure injury stages were […] An ensemble (Super Learner) algorithm was used to predict the outcome, and model performance (accuracy, sensitivity, specificity, F1 score, and AUC) was evaluated with 5-fold cross-validation. The analyses used the following open-source R packages: Hmisc for missingness patterns, h2o for prediction, DALEX for model 'explainers,' and modelStudio for interactive dashboards. The code will be made publicly available through the Open Science Framework (OSF) to support transparency and reproducibility.
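The evaluation step can be illustrated without any ML library. The Python below is a minimal sketch, not the authors' h2o pipeline: it assigns records to 5 folds stratified by outcome (an assumption; the abstract does not say how folds were formed), gathers out-of-fold predictions from a hypothetical `fit_predict` callable standing in for the Super Learner, and computes the reported metrics. The 0.5 probability cutoff is also an assumption, since the abstract reports no threshold.

```python
import random
from typing import Callable, Dict, List, Sequence

# Hypothetical model interface (assumption): (train_X, train_y, test_X) -> risk scores.
FitPredict = Callable[[List[list], List[int], List[list]], List[float]]


def stratified_folds(labels: Sequence[int], k: int = 5, seed: int = 0) -> List[int]:
    """Assign each record a fold id in 0..k-1, spreading cases and non-cases evenly."""
    rng = random.Random(seed)
    fold_of = [0] * len(labels)
    for cls in (0, 1):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            fold_of[i] = pos % k  # round-robin keeps class counts balanced across folds
    return fold_of


def out_of_fold_predictions(X: List[list], y: List[int], fit_predict: FitPredict,
                            k: int = 5, seed: int = 0) -> List[float]:
    """Score every record with a model trained only on the other k-1 folds."""
    folds = stratified_folds(y, k, seed)
    preds = [0.0] * len(y)
    for f in range(k):
        train = [i for i in range(len(y)) if folds[i] != f]
        test = [i for i in range(len(y)) if folds[i] == f]
        scores = fit_predict([X[i] for i in train], [y[i] for i in train],
                             [X[i] for i in test])
        for i, s in zip(test, scores):
            preds[i] = s
    return preds


def threshold_metrics(y_true: Sequence[int], y_prob: Sequence[float],
                      threshold: float = 0.5) -> Dict[str, float]:
    """Accuracy, sensitivity, specificity, and F1 at a single probability cutoff."""
    tp = fp = tn = fn = 0
    for y, p in zip(y_true, y_prob):
        pred = 1 if p >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1 and y == 0:
            fp += 1
        elif pred == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    sens = tp / (tp + fn) if tp + fn else 0.0  # recall for the injury class
    spec = tn / (tn + fp) if tn + fp else 0.0
    prec = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * prec * sens / (prec + sens) if prec + sens else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "sensitivity": sens,
            "specificity": spec, "f1": f1}


def auc(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """AUC as the probability that a random case outscores a random non-case (ties count 0.5)."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    y = [0, 0, 1, 1, 0, 1]
    p = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]
    # {'accuracy': 0.833, 'sensitivity': 0.667, 'specificity': 1.0, 'f1': 0.8}
    print({name: round(v, 3) for name, v in threshold_metrics(y, p).items()})
    print(round(auc(y, p), 3))  # 0.889
```

Stratification matters here because only about 5% of patients had the outcome, and an unstratified split could leave a fold with very few cases. The pairwise AUC loop is quadratic in the number of records, so it suits illustration rather than a cohort of 28,398 patients, where a rank-based formula or a library routine is the practical choice.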

Results:

Of the 50,920 patients in the database, 28,398 had sufficient data (at least one set of vital signs in the first 48 hours) and formed the analytic sample; 1,395 (4.9%) of them developed at least one hospital-acquired pressure injury. The Super Learner model demonstrated good discrimination (AUC = 0.79). The predictive models were presented in an interactive dashboard that supports transparency and interpretability at the population, subpopulation, and individual-patient levels.
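At the population level, dashboards of this kind commonly display permutation variable importance, which DALEX provides through `model_parts()`. The sketch below is a dependency-free Python analogue, not the authors' code: `predict` is any fitted model exposed as a scoring function, the loss is 1 − AUC, and 10 permutations are used per feature; all three choices are assumptions for illustration. Local (individual-patient) explanations, such as the break-down plots DALEX produces with `predict_parts()`, are not shown.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def auc(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """Probability that a random case outscores a random non-case (ties count 0.5)."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def permutation_importance(predict: Callable[[List[Row]], List[float]],
                           X: List[Row], y: Sequence[int],
                           n_permutations: int = 10, seed: int = 0) -> List[float]:
    """Mean increase in loss (1 - AUC) when each feature column is shuffled.

    A larger value means the model relies more on that feature across the whole
    population; a value near zero means shuffling it barely changes performance.
    """
    rng = random.Random(seed)
    baseline = 1.0 - auc(y, predict(X))
    importances = []
    for j in range(len(X[0])):
        total = 0.0
        for _ in range(n_permutations):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with the shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            total += (1.0 - auc(y, predict(X_perm))) - baseline
        importances.append(total / n_permutations)
    return importances


if __name__ == "__main__":
    # Toy data: the "model" scores on feature 0 only and ignores feature 1.
    X = [[0.1, 5.0], [0.2, 3.0], [0.3, 1.0], [0.7, 2.0], [0.8, 4.0], [0.9, 6.0]]
    y = [0, 0, 0, 1, 1, 1]
    print(permutation_importance(lambda rows: [r[0] for r in rows], X, y))
```

Because the toy model never reads feature 1, shuffling that column leaves the loss unchanged and its importance is exactly zero, while feature 0 shows a positive loss increase.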

Discussion:

Transparent and interpretable ML models provide a platform for clinicians to contribute their expert knowledge, which can in turn enhance the models' predictive capabilities. This integration of human expertise into machine learning, known as expert-augmented machine learning, offers a promising avenue for improving pressure injury risk prediction models.


References:

1. Johnson, A. E. W., Bulgarelli, L., Shen, L., Gayles, A., Shammout, A., Horng, S., Pollard, T. J., Hao, S., Moody, B., Gow, B., Lehman, L. H., Celi, L. A., & Mark, R. G. (2023). MIMIC-IV, a freely accessible electronic health record dataset. Scientific Data, 10(1), 1. https://doi.org/10.1038/s41597-022-01899-x

2. Coleman, S., Nixon, J., Keen, J., Wilson, L., McGinnis, E., Dealey, C., Stubbs, N., Farrin, A., Dowding, D., Schols, J. M., Cuddigan, J., Berlowitz, D., Jude, E., Vowden, P., Schoonhoven, L., Bader, D. L., Gefen, A., Oomens, C. W., & Nelson, E. A. (2014). A new pressure ulcer conceptual framework. Journal of Advanced Nursing, 70(10), 2222–2234. https://doi.org/10.1111/jan.12405
